In recent conversations with founders and investors alike, one theme keeps resurfacing: in today's AI landscape, switching costs are dangerously low. As someone who spends every day thinking about AI innovation and its long-term trajectories, I believe this is one of the most underappreciated risks (and biggest opportunities) in building enduring companies.

In venture investing, you're betting on companies over 5-10+ years. Traction today is meaningless if users can easily leave tomorrow. If a startup loses momentum because customers switch, regaining it is extremely difficult. Commoditization pressure is real, especially as many foundation models and AI tools increasingly resemble commodities (I highly recommend this article by my good friend Rob Toews).

In this post, I want to outline Merantix Capital’s approach to switching costs: why they matter more than ever, how we believe startups can build defensibility, and how emerging technologies like continual learning might change the game.

In short, the goal of an AI founder should be to create switching costs so high that replacing their product feels like replacing a well-trained, high-performing employee: in other words, very difficult.

Why Switching Costs Are So Low in AI

APIs and models are interchangeable. Companies can swap model providers almost overnight. In many cases, swapping from one LLM provider to another is as simple as updating an API endpoint. I see this in my own day to day: I switch tools as soon as a slightly better, cheaper, or faster option appears.
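To make the point concrete, here is a minimal sketch of what "updating an API endpoint" can look like in application code. It assumes both providers accept the same chat-style request shape, which is common but not universal. The provider URLs, model names, and the `ProviderConfig` and `build_chat_request` helpers are hypothetical, and no network call is made.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ProviderConfig:
    """Everything the app knows about a model provider (hypothetical fields)."""
    base_url: str
    model: str


def build_chat_request(cfg: ProviderConfig, prompt: str) -> dict:
    """Build a chat-style HTTP request description without sending it."""
    return {
        "url": f"{cfg.base_url}/chat/completions",
        "json": {
            "model": cfg.model,
            "messages": [{"role": "user", "content": prompt}],
        },
    }


# Placeholder providers; swapping is a one-line config change.
PROVIDER_A = ProviderConfig("https://api.provider-a.example/v1", "model-a")
PROVIDER_B = ProviderConfig("https://api.provider-b.example/v1", "model-b")

request_a = build_chat_request(PROVIDER_A, "Summarize this contract.")
request_b = build_chat_request(PROVIDER_B, "Summarize this contract.")
```

Nothing else in the application has to change: the prompt, the message format, and the calling code are identical for both providers. That is exactly why a thin wrapper around a model offers so little protection.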

The speed of innovation is relentless. New models and techniques are appearing every few months. Even companies with strong technical teams can be outpaced if they rely purely on product speed without building underlying defensibility.

B2B switching happens faster than consumer switching. Business users are rational, technical, and highly cost-driven. Unlike consumer choices, which are often sticky due to habits, brand perception, or inertia, business decisions are made on ROI, performance, and cost.

Commoditization is accelerating. Models today largely deliver similar outputs, and continual learning is not yet widely adopted. This makes it very easy for users to move from one provider to another without any real switching pain.

Low integration depth. Many AI applications layer thinly on top of models without deep integrations into broader systems (e.g., ERP, EHR), making it easier for users to switch when something marginally better arrives.

How to Build and Evaluate High Switching Costs

To build truly durable companies in AI, founders must think deliberately about switching costs from day one.

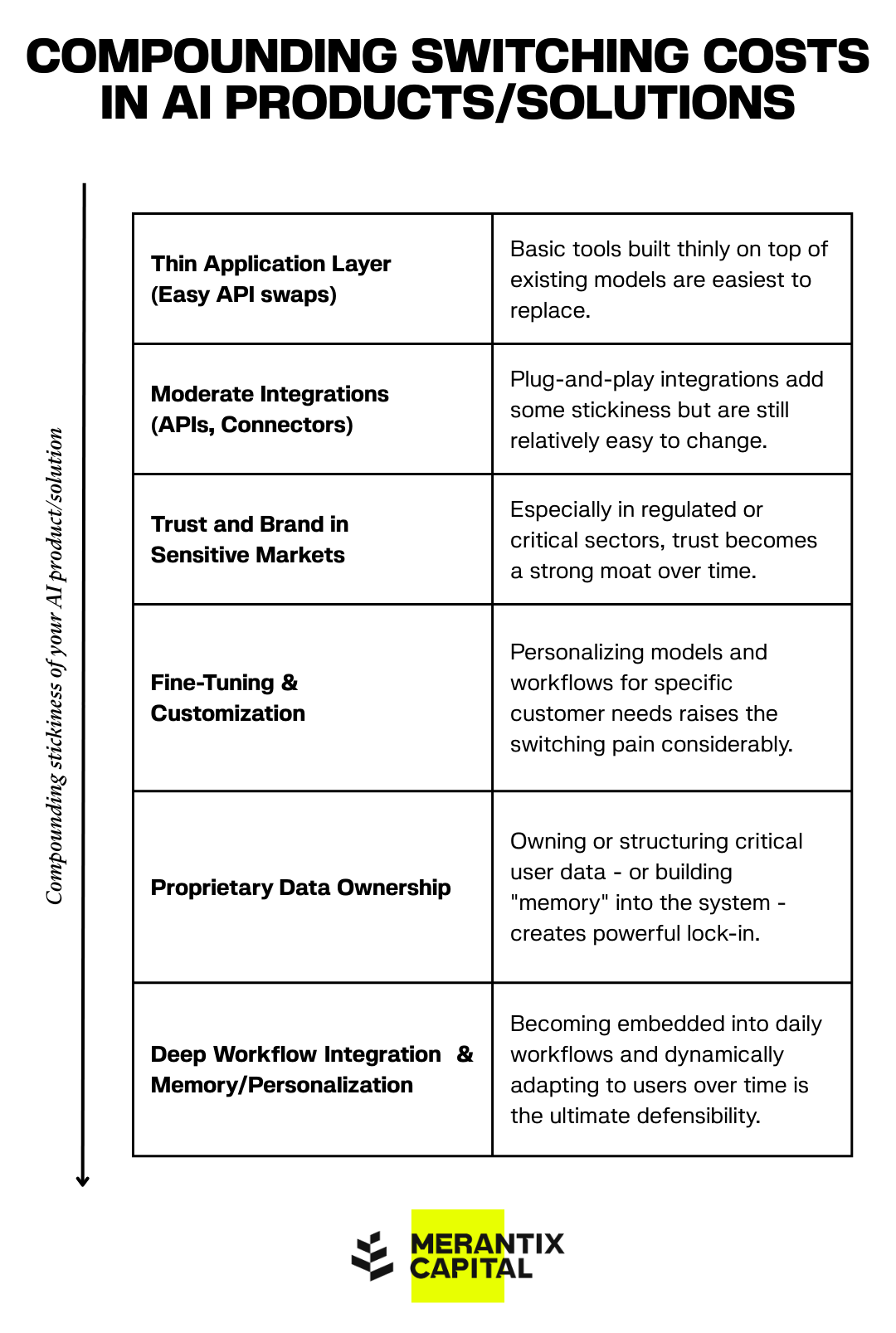

We find it useful to think of switching as compounding layers.

The deeper you move a customer down these layers, the harder it becomes for them to switch away.

Each layer deeper on this chart compounds the stickiness of your product.

The Emerging Frontier: Memory, Continual Learning, and Durable Moats

Today’s LLMs are powerful but fundamentally static: they learn during training and then stop adapting. True human-level AI requires models that can learn continuously throughout their deployment lifecycle.

Several workaround techniques exist today:

Fine-tuning: Helps update models post-deployment, but is batch-based, expensive, and limited.

Retrieval-augmented generation (RAG): External memory approaches are useful but face scaling and latency challenges.

In-context learning: Allows models to "simulate" adaptation during inference but without true weight updates.

However, none of these approaches solves the deeper problem of building durable, evolving AI models.
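A toy sketch helps show why these workarounds fall short of real learning. The example below imitates the external-memory and in-context approaches: preferences are stored outside the model, retrieved by simple word overlap, and pasted into the prompt. The `PreferenceMemory` class, the `build_prompt` helper, and the sample notes are illustrative assumptions, and production systems typically use embedding search rather than word overlap.

```python
from __future__ import annotations

import re


def tokens(text: str) -> set[str]:
    """Lowercase word set used for naive overlap scoring."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


class PreferenceMemory:
    """External memory: notes live outside the model and are never trained in."""

    def __init__(self) -> None:
        self.notes: list[str] = []

    def remember(self, note: str) -> None:
        self.notes.append(note)

    def retrieve(self, query: str, k: int = 2) -> list[str]:
        """Return up to k notes sharing at least one word with the query."""
        query_words = tokens(query)
        scored = [(len(query_words & tokens(note)), note) for note in self.notes]
        relevant = [pair for pair in scored if pair[0] > 0]
        relevant.sort(key=lambda pair: pair[0], reverse=True)
        return [note for _, note in relevant[:k]]


def build_prompt(memory: PreferenceMemory, request: str) -> str:
    """Prepend retrieved notes to the request; the model itself is unchanged."""
    context = "\n".join(f"- {note}" for note in memory.retrieve(request))
    return f"Known preferences:\n{context}\n\nRequest: {request}"


memory = PreferenceMemory()
memory.remember("Contracts use British spelling and numbered clauses")
memory.remember("Candidate screening requires five years of Python")

prompt = build_prompt(memory, "Draft a supplier contract with clauses")
```

All of the "adaptation" here sits in the prompt text. The model's weights are the same before and after, which is the limitation the techniques above share.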

Continual learning, where models update their own weights on the fly without retraining from scratch, represents a profound shift. In his article, Toews argues that continual learning will “unleash AI’s full potential to power hyperpersonalized and hypersticky AI products.”

In other words, imagine an AI legal assistant that becomes better at drafting documents in your firm's style over time. Or a recruitment AI that learns your exact criteria for screening candidates without explicit reprogramming. Models would develop deep, personalized knowledge that is hard to replicate elsewhere, creating switching costs akin to replacing a well-trained, high-performing employee.

Companies like Writer and Sakana AI are already experimenting with architectures that enable this self-evolving behavior. These breakthroughs hint at the future direction of defensibility in AI products.

How Founders Should Think About Switching Costs from Day One

Switching costs aren’t a "Series B problem." They must be part of the early design and go-to-market strategy:

Move fast but design for depth: Speed to market is crucial, but not at the expense of defensibility. Focus on integrations, memory, and workflow entrenchment.

Be intentional about data: Find ways to own, structure, or personalize user data.

Audit your defensibility constantly: Revisit the checklist regularly as the product evolves.

Think ahead to continual learning: Even if not feasible today, position your architecture for future personalization and adaptation.

Conclusion

In AI, defensibility is no longer just about building technically superior products. It’s about durability: how resilient your customer base is in the face of rapid technological change.

That's why I focus my investments on startups that integrate deeply into critical workflows, prioritize user trust, create strong switching costs, and build toward the future of dynamically learning AI systems.

If you're building a company that understands this challenge and is actively working on durable moats in AI, I'd love to hear from you.